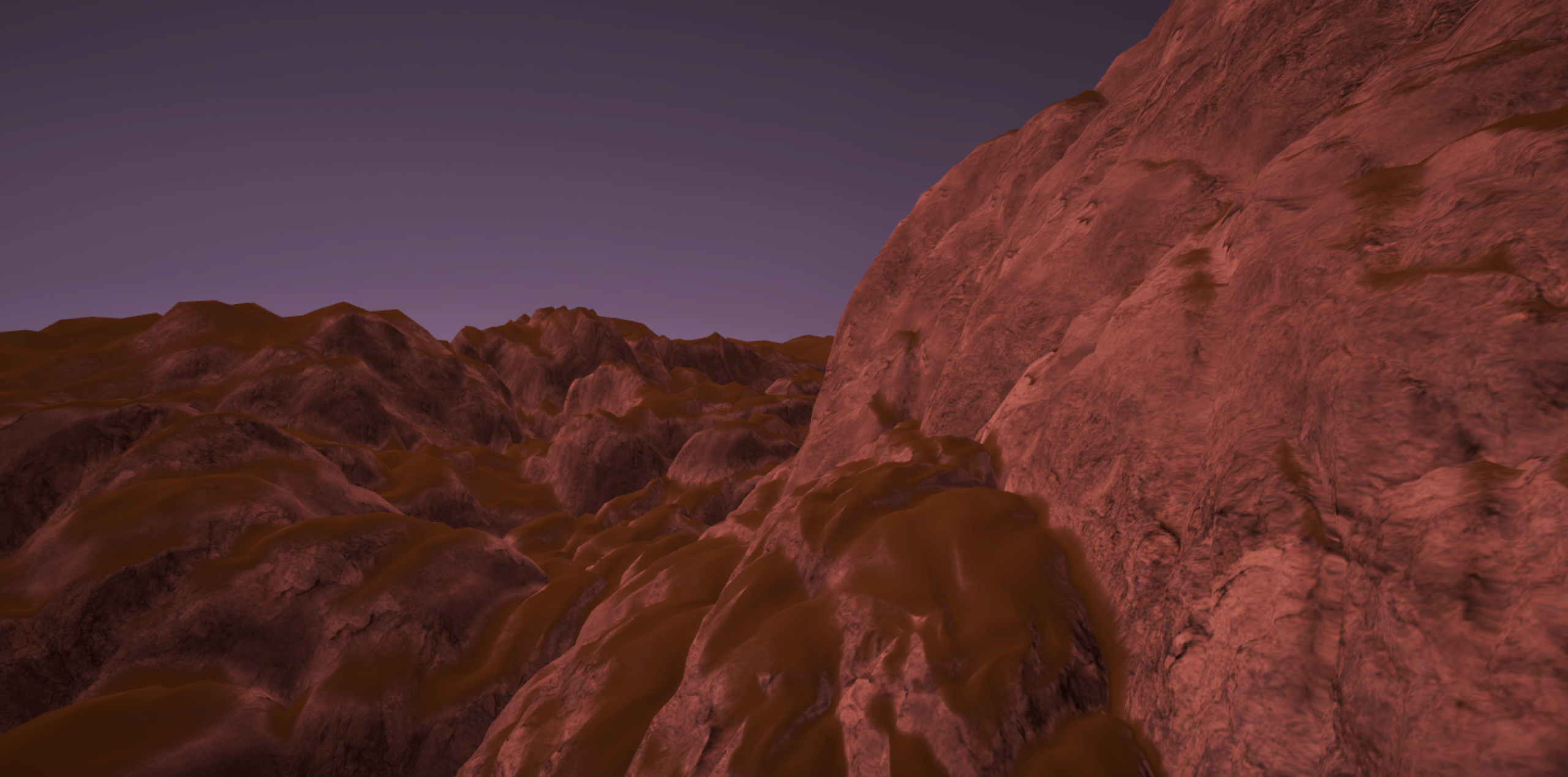

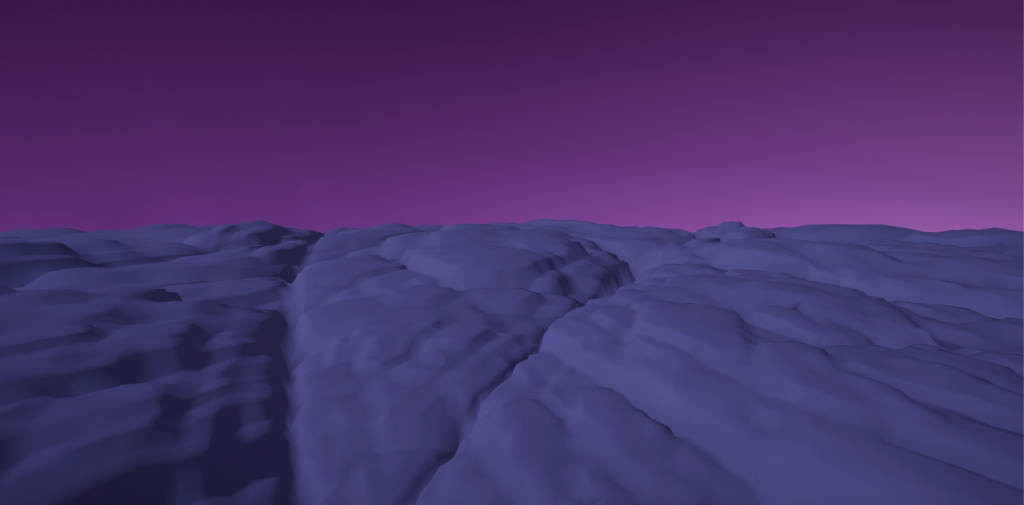

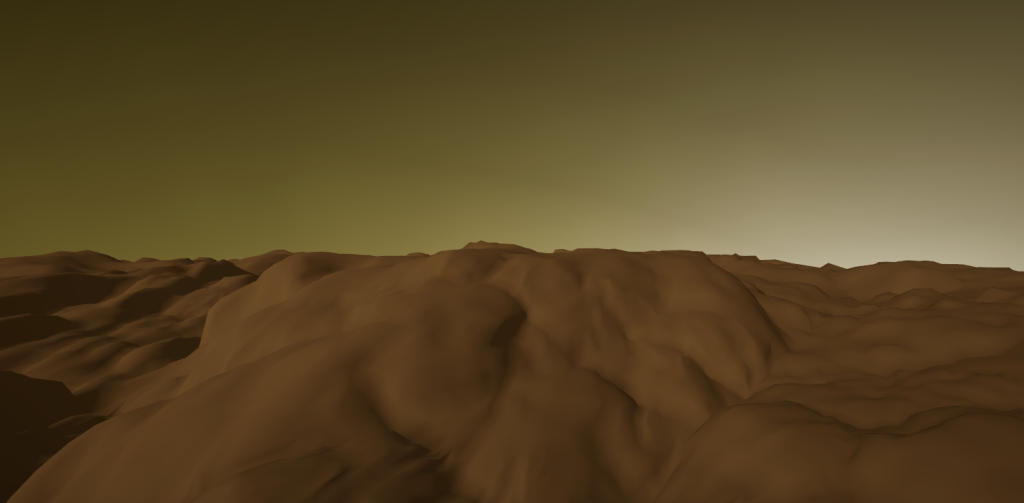

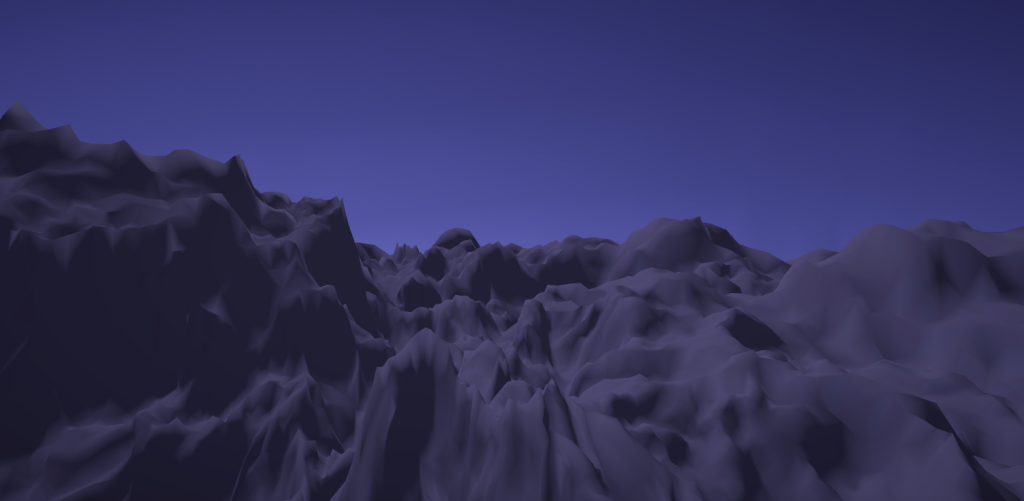

I implemented the terrain generation technique outlined in Real‑Time Hyper‑Amplification of Planets (Cortial et al., 2020) within Unity. The system adaptively subdivides a planet’s mesh in real time, enabling detailed terrain without overwhelming memory or the GPU. A custom set of production rules generates realistic landforms such as mountains, valleys, and plateaus, creating planets that are both vast in scale and rich in detail.

The full implementation is maintained privately in Unity version control. I’m happy to provide code excerpts or discuss the architecture upon request.

Introduction

In the realm of 3D graphics, Democritus was wrong: the universe’s fundamental building block isn’t the atom, it’s the triangle (he was wrong about the real world, too). In most real-time rendering pipelines, objects are assembled from countless triangles. Now imagine rendering a to-scale planet where, at ground level, you can make out every mountain ridge and winding river. Pull back until the entire sphere fits on screen, and all that intricate terrain still exists in memory. The result? An astronomical number of triangles—disastrous for both performance and memory.

But here’s the catch: when you’re zoomed out, you don’t need the same microscopic precision. What if the number of triangles adapted to your distance from the surface? This is the essence of dynamic level of detail (LOD) rendering. From orbit, the planet can be represented with a coarse, low-polygon mesh; as the camera descends, new geometry is introduced to reveal mountains, valleys, and rivers with increasing fidelity. Fine surface features are preserved where they are visible, without the memory and processing cost of rendering them everywhere at all times. The challenge lies in generating and refining this mesh seamlessly, without visual popping or performance stalls, so that the transition from space to ground feels continuous and natural.

Low-Resolution Generation

First, a low-resolution mesh is generated to serve as the baseline model when the planet is fully zoomed out. Its vertices are placed using Poisson disk sampling, which spaces points relatively evenly while preserving natural irregularity. To speed up sampling, I used a spatial grid hashmap. Each candidate point is checked only against points in nearby grid cells, rather than against every existing point as in the naïve method. Next, a spherical Delaunay triangulation is applied to the sampled points, which tends to avoid extremely acute triangles. The resulting triangles are nearly, but not perfectly, regular: an ideal structure for subsequent terrain generation.
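Below is a minimal Python sketch of Poisson disk sampling on a unit sphere, accelerated with a grid hashmap. It uses simple dart throwing: a random candidate is accepted only if no existing sample lies within `min_dist`. This may differ from the sampler used in the project, and `poisson_disk_sphere` and its parameters are hypothetical names. The grid works because any point closer than `min_dist` must fall in one of the 27 cells surrounding the candidate, so only those cells are searched.

```python
import math
import random
from collections import defaultdict


def random_unit_vector(rng):
    """Uniform random point on the unit sphere (normalized Gaussian vector)."""
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        n = math.sqrt(sum(c * c for c in v))
        if n > 1e-12:
            return tuple(c / n for c in v)


def poisson_disk_sphere(min_dist, max_attempts=10000, seed=0):
    """Dart-throwing Poisson disk sampling on the unit sphere.

    min_dist is the minimum chord (straight-line) distance between samples.
    A 3D grid with cell size min_dist serves as a spatial hashmap: any point
    closer than min_dist lies in one of the 27 cells around the candidate.
    """
    rng = random.Random(seed)
    grid = defaultdict(list)  # cell coordinates -> indices into points
    points = []

    def cell_of(p):
        return tuple(math.floor(c / min_dist) for c in p)

    def too_close(p):
        ci, cj, ck = cell_of(p)
        return any(
            math.dist(p, points[idx]) < min_dist
            for di in (-1, 0, 1)
            for dj in (-1, 0, 1)
            for dk in (-1, 0, 1)
            for idx in grid.get((ci + di, cj + dj, ck + dk), ())
        )

    for _ in range(max_attempts):
        p = random_unit_vector(rng)
        if not too_close(p):
            grid[cell_of(p)].append(len(points))
            points.append(p)
    return points
```

For points on a sphere, the spherical Delaunay triangulation coincides with the convex hull of the points. A 3D convex-hull routine is therefore one way to build the baseline mesh from these samples.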

Adaptive Subdivision

How do we subdivide the mesh in real time? The short answer: compute shaders. Compute shaders are programs that run on the GPU and execute general-purpose computations across many threads in parallel. Once the planet mesh’s vertex, edge, and face data are packed into GPU buffers, each triangle or edge can be handled by its own thread. An entire subdivision step then runs in parallel rather than one element at a time.
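To make the buffer idea concrete, here is a CPU-side Python sketch of a buffer layout and the per-thread structure of a marking pass. In Unity this would be an HLSL compute kernel. Several details are assumptions for illustration:

- the layout stores edges as vertex-index pairs and faces as edge-index triples;
- a face is marked when its centroid lies within the distance threshold;
- the names `MeshBuffers`, `mark_kernel`, and `dispatch` are hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class MeshBuffers:
    """Flat arrays standing in for GPU buffers (hypothetical layout).

    Edges hold two vertex indices and faces hold three edge indices,
    so neighboring faces share edge records.
    """
    positions: list  # [(x, y, z), ...]
    edges: list      # [(v0, v1), ...]
    faces: list      # [(e0, e1, e2), ...]
    subdivide: list = field(default_factory=list)  # one flag per face


def face_vertices(buf, f):
    """Collect the three distinct vertex indices of face f from its edges."""
    vs = []
    for e in buf.faces[f]:
        for v in buf.edges[e]:
            if v not in vs:
                vs.append(v)
    return vs


def mark_kernel(buf, face_id, camera, threshold):
    """Body of one 'thread': flag the face if its centroid is near the camera."""
    vs = face_vertices(buf, face_id)
    centroid = [sum(buf.positions[v][i] for v in vs) / 3.0 for i in range(3)]
    buf.subdivide[face_id] = math.dist(centroid, camera) < threshold


def dispatch(buf, camera, threshold):
    """Emulate a compute dispatch: one independent invocation per face."""
    buf.subdivide = [False] * len(buf.faces)
    for face_id in range(len(buf.faces)):  # on the GPU these run in parallel
        mark_kernel(buf, face_id, camera, threshold)
```

Each invocation reads shared data but writes only its own flag. The invocations are therefore independent, which is what makes the pass safe to run in parallel.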

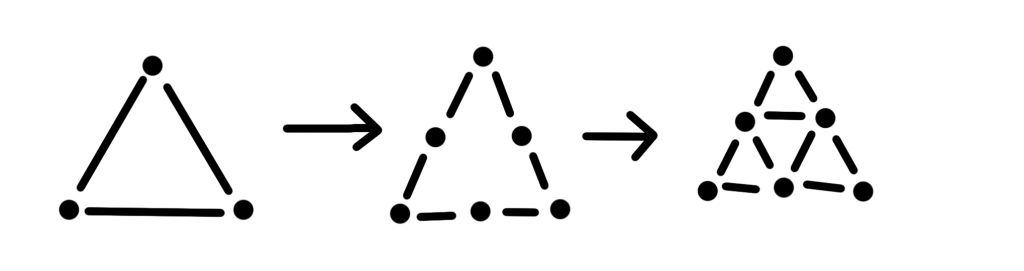

In a subdivision step, every triangle within a certain distance of the camera is split into four smaller triangles through the following process:

- Triangles within the distance threshold of the camera are marked for subdivision.

- Each edge of a marked triangle is split into two at its midpoint, inserting 3 new vertices.

- 3 new edges connect these midpoints, dividing the triangle into 4 faces.
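The steps above can be sketched for a simple triangle-list mesh as follows. This is a hypothetical CPU-side version; `subdivide_step` is not from the project. It assumes the undisplaced base shape is a unit sphere, and it caches midpoints per edge so that an edge shared by two marked triangles gets a single new vertex.

```python
import math


def midpoint_on_sphere(a, b):
    """Midpoint of edge ab, pushed back onto the unit sphere (assumed base shape)."""
    m = [(a[i] + b[i]) / 2.0 for i in range(3)]
    n = math.sqrt(sum(c * c for c in m))
    return tuple(c / n for c in m)


def subdivide_step(positions, faces, marked):
    """Split each marked triangle into four; unmarked faces are kept as-is.

    positions: list of (x, y, z); faces: list of (a, b, c) vertex indices;
    marked: set of face indices to split. Returns new (positions, faces).
    """
    positions = list(positions)  # copy so the caller's list is not mutated
    midpoint_of = {}             # sorted edge (u, v) -> midpoint vertex index

    def mid(u, v):
        key = (min(u, v), max(u, v))
        if key not in midpoint_of:
            midpoint_of[key] = len(positions)
            positions.append(midpoint_on_sphere(positions[u], positions[v]))
        return midpoint_of[key]

    new_faces = []
    for f, (a, b, c) in enumerate(faces):
        if f not in marked:
            new_faces.append((a, b, c))
            continue
        # Up to 3 new vertices; shared edges reuse the cached midpoint.
        ab, bc, ca = mid(a, b), mid(b, c), mid(c, a)
        # The interior edges ab-bc, bc-ca, ca-ab carve out 4 faces,
        # each keeping the parent triangle's winding order.
        new_faces += [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]
    return positions, new_faces
```

An unmarked triangle bordering a marked one keeps its original, unsplit edge. The neighbor's new midpoint therefore forms a T-junction on that edge, which is where cracks can appear once the midpoint is displaced.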

In each subdivision step, the distance threshold for triggering further subdivision is halved. Each subdividing triangle produces four children, but halving the threshold shrinks the eligible area by a factor of four. Only about one in four of those children therefore qualifies for the next split. The number of triangles subdivided per level stays roughly constant, so the total triangle count grows linearly with the number of levels rather than exponentially.
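A rough estimate shows why this balance yields linear growth. The estimate rests on three assumptions:

- the surface near the camera is locally flat;
- a level-$k$ triangle has area $A_k \approx A_0/4^k$;
- the level-$k$ threshold is $r_k = r_0/2^k$.

Under these assumptions, the number $N_k$ of level-$k$ triangles inside the threshold is about

$$
N_k \approx \frac{\pi r_k^2}{A_k} = \frac{\pi r_0^2 / 4^k}{A_0 / 4^k} = \frac{\pi r_0^2}{A_0} = N_0 .
$$

Each split replaces one triangle with four, a net gain of three. Starting from $T_0$ triangles, after $L$ levels the mesh therefore holds roughly

$$
T_L \approx T_0 + 3 \sum_{k=0}^{L-1} N_k \approx T_0 + 3 L N_0 .
$$

By comparison, uniformly subdividing the whole mesh $L$ times would produce $4^L T_0$ triangles.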

Newly inserted vertices are displaced procedurally, so they no longer lie on the unsplit edge of a neighboring, non-subdividing triangle. This opens gaps along the border between subdividing and non-subdividing triangles. To close them, additional “ghost” vertices and edges are introduced. By handling the specific cases for triangles with one or two subdividing neighbors, these adjustments keep the terrain seamless and crack-free.
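The ghost-vertex construction isn't detailed here, so the sketch below shows one common way to handle the same cases, in the spirit of red-green refinement. It is not necessarily the project’s method. A non-subdividing triangle is re-triangulated to include the midpoints its neighbors inserted, so both sides share the displaced vertex and no gap can open. `close_cracks` and its `mids` input (sorted edge to midpoint index) are hypothetical.

```python
def close_cracks(tri, mids):
    """Re-triangulate a non-subdividing triangle so it uses the midpoints its
    subdividing neighbors inserted on shared edges (T-junction removal).

    tri:  (a, b, c) vertex indices.
    mids: hypothetical map from a sorted edge (u, v) to its midpoint index.
    Returns triangles covering tri with the same winding order.
    """
    def mid(u, v):
        return mids.get((min(u, v), max(u, v)))

    a, b, c = tri
    # Try each rotation of the corners until the split edges line up
    # with one of the canonical patterns handled below.
    for x, y, z in ((a, b, c), (b, c, a), (c, a, b)):
        mxy, myz, mzx = mid(x, y), mid(y, z), mid(z, x)
        n = sum(m is not None for m in (mxy, myz, mzx))
        if n == 0:
            return [(x, y, z)]                   # no neighbor split: unchanged
        if n == 1 and mxy is not None:
            return [(x, mxy, z), (mxy, y, z)]    # fan from the one midpoint
        if n == 2 and mxy is not None and myz is not None:
            # Cut off corner y, then split the remaining quad x-mxy-myz-z.
            return [(mxy, y, myz), (x, mxy, myz), (x, myz, z)]
        if n == 3:
            # Every edge split: equivalent to a full 1-to-4 subdivision.
            return [(x, mxy, mzx), (mxy, y, myz), (mzx, myz, z), (mxy, myz, mzx)]
    raise RuntimeError("unreachable: every split pattern matches some rotation")
```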

Terrain Features

A set of subdivision rules governs both the generation of terrain details and the shaping of specific landform patterns. These rules are driven by vertex height, level of detail, and a set of per-vertex control parameters (such as crust elevation, wetness, and orogeny age) that determine the type of relief formed at each location. The control parameters are initialized from user-defined control maps (images mapped onto the planet’s surface). These maps give targeted control over whether a region develops spiky mountains, rounded peaks, gentle hills, or broad plains. To introduce natural variation, the rules also draw on pseudorandom values that are seeded deterministically. As a result, a region produces the same terrain features every time it is re-subdivided, frame after frame.
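The pieces in this paragraph can be sketched together: a control-map lookup, a deterministic per-vertex random value, and a height rule that combines them. Every detail here is an assumption:

- the control map uses an equirectangular layout;
- the hash is the MurmurHash3 32-bit finalizer, keyed on the vertex’s quantized undisplaced position, which stays stable across frames even if buffer indices change;
- `midpoint_height` is a generic midpoint-displacement stand-in, not the paper’s production rules.

```python
import math


def fmix32(h):
    """MurmurHash3 32-bit finalizer: scrambles the bits of an integer."""
    h &= 0xFFFFFFFF
    h ^= h >> 16
    h = (h * 0x85EBCA6B) & 0xFFFFFFFF
    h ^= h >> 13
    h = (h * 0xC2B2AE35) & 0xFFFFFFFF
    h ^= h >> 16
    return h


def stable_random(p, level, seed=0):
    """Deterministic value in [0, 1) for a vertex.

    Keyed on the quantized, undisplaced unit-sphere position and the level
    (assumption), so the same vertex gets the same value in every frame.
    """
    h = seed & 0xFFFFFFFF
    keys = [round(c * (1 << 20)) for c in p] + [level]
    for k in keys:
        h = fmix32(h ^ fmix32(k + 0x9E3779B9))
    return h / 2**32


def sample_control_map(img, p):
    """Nearest-pixel lookup, assuming an equirectangular control map.

    img is a list of rows (north at row 0); p is a point on the unit sphere.
    """
    x, y, z = p
    u = 0.5 + math.atan2(z, x) / (2 * math.pi)             # longitude -> [0, 1]
    v = 0.5 - math.asin(max(-1.0, min(1.0, y))) / math.pi  # latitude -> [0, 1]
    rows, cols = len(img), len(img[0])
    return img[min(int(v * rows), rows - 1)][min(int(u * cols), cols - 1)]


def midpoint_height(h_a, h_b, p_mid, level, roughness_map,
                    base_amplitude=1.0, seed=0):
    """Hypothetical rule: parent average plus a seeded offset whose amplitude
    follows the local control value and halves at every level."""
    roughness = sample_control_map(roughness_map, p_mid)
    offset = 2.0 * stable_random(p_mid, level, seed) - 1.0  # in [-1, 1)
    return 0.5 * (h_a + h_b) + base_amplitude * roughness * 0.5 ** level * offset
```

Keying the hash on position rather than on a buffer index is the design choice that matters here. A compute-shader mesh may reorder its buffers between frames, but the undisplaced position of a given midpoint is recomputed identically each time.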

Future Additions

This project still has plenty of untapped potential. One major next step is implementing the river and lake generation described in the paper, an addition that would also give me an opportunity to explore stylized water shading. I also plan to enhance terrain texturing by incorporating stochastic tiling and blending patterns influenced by the control parameters. Finally, I aim to refine the atmospheric effects, potentially adding dynamic cloud generation.